1. The Domain of Phonetics

Key Concepts

- The form of speech is not arbitrary but contingent on the human body, cognition and culture.

- Phonological structures and patterns arise from human constraints.

- Scientific Phonetics is an experimental science that seeks explanations for the physical form of spoken language.

- There is a burgeoning industry in speech technology that seeks to bring spoken language to computers.

- Neuroscience is providing new opportunities for studying how the brain processes spoken language.

Learning Objectives

At the end of this topic the student should be able to:

- describe the domain of Phonetics as a scientific discipline

- contrast "taxonomic" and "scientific" Phonetics

- describe the idea of the speech chain as a descriptive model for speech communication, and also criticise its limitations

- identify some technological applications of Phonetics

- identify some of the key people and events in the history of the field

- contrast the goals of Phonetics and Phonology

Topics

- What does Phonetics study?

Definition

Phonetics is defined in Wikipedia as:

Phonetics (pronounced /fəˈnɛtɪks/, from the Greek: φωνή, phōnē, 'sound, voice') is a branch of linguistics that comprises the study of the sounds of human speech [...]. It is concerned with the physical properties of speech sounds [...] (phones): their physiological production, acoustic properties, auditory perception, and neurophysiological status.

"is a branch of linguistics": reminds us that speech is spoken language, and that to study Phonetics is to study one aspect of human linguistic communication.

"sounds of human speech": here 'sounds' refers to the abstract notion of a 'piece of linguistic code' used in spoken linguistic communication, not just to the physical pressure wave.

"is concerned with the physical properties": Phonetics is about the physical reality of speech production. It is a quantitative, experimental science that uses instrumental methods to explore speech communication.

"speech sounds (phones)": the term 'phone' has come to refer to possible instantiations of phonological units (phonemes) in context.

"production": the use of human biological systems for speech generation.

"acoustic properties": the physical properties of speech sound signals, as might be detected by a microphone.

"auditory perception": the perceptual properties of speech sounds, as processed through the human auditory system and perceived by the brain.

"neurophysiological status": the neuroscience behind the production and perception of speech.

There is a problem with this definition. It could be taken to mean that Phonetics is a purely descriptive endeavour: that it just answers the question 'What is speech like?' and not 'How is speech used?' or 'Why does it take the form it does?'. But Phonetics is more than descriptive; it is an experimental science that has theories and models that make testable predictions about the form of speech in different communicative situations. It legitimately addresses questions about how and why speech takes the forms it does. Phonetics also asks questions like:

- How is speech planned and realised by the vocal system?

- How is speech interpreted by the perceptual system?

- How and why do speech sounds vary across messages, across speakers, across accents, across speaking styles, across emotions?

- How do infants learn to speak and listen?

- How and why do speech sounds change over time within society?

Ohala (2010) makes the distinction between "Taxonomic Phonetics" and "Scientific Phonetics". The former is about the phonetic terminology and symbols used to describe and classify speech sounds (and has changed little since the formation of the International Phonetic Association), while the latter is an empirical science that adapts to new data, concepts and methods. "Scientific phonetics is the intellectually most exciting form of the field - and one of the most successful and rigorous within linguistics", he says. An analogous difference between taxonomy and science might be seen in Astronomy: a taxonomic Astronomy would involve cataloguing types of stars whereas a scientific Astronomy would also involve finding out how they work.

The Speech Chain

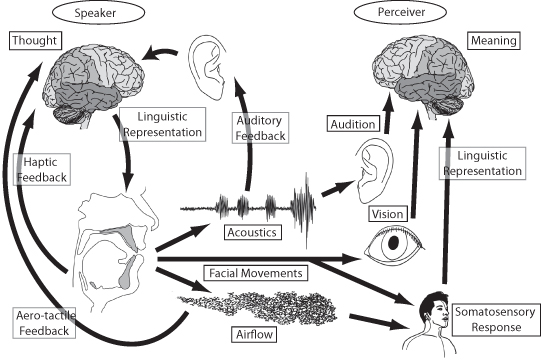

The domain of Phonetics is frequently described in terms of a diagram called "The Speech Chain". Here is one version of the speech chain diagram: [image source]

The Speech Chain shows how messages are communicated from speaker to hearer through speech, focussing on the measurable, physical properties of articulation, sound and hearing. While such diagrams provide a useful basis for discussion of the domain of phonetics, they leave out details of the processing necessary for communication to succeed. These are the types of questions that a Scientific Phonetics should be asking:

- How does the form of speech encode linguistic and paralinguistic information?

- How does variability in the form of speech relate to the communicative intentions of the speaker?

- How does a listener extract linguistic information from a highly variable and unreliable signal?

- How does the listener combine information across modalities of hearing and vision?

- How does the listener reconcile information in the signal with their own knowledge of language and of the world to uncover meaning and intentions?

- How does a speaker know how to best exploit speech for communication in any particular circumstance?

The list of topics covered in the last International Congress of Phonetic Sciences will give you some idea of the interests of Phoneticians in the 21st century.

Applications

Phonetics also provides the science behind a multi-billion dollar industry in speech technology. Phonetic ideas have value in building applications such as:

- Speech recognition. Systems for the conversion of speech to text, for spoken dialogue with computers or for executing spoken commands.

- Speech synthesis. Systems for converting text to speech or (together with natural language generation) concept to speech.

- Speaker recognition. Systems for identifying individuals or language groups by the way they speak.

- Forensic speaker comparison. Study of recordings of the speech of perpetrators of crimes to provide evidence for or against the guilt of a suspect.

- Language pronunciation teaching. Systems for the assessment of pronunciation quality, used in second language learning.

- Assessment and therapy for disorders of speech and hearing. Technologies for the assessment of communication disorders, for the provision of therapeutic procedures, or for communication aids.

- Monitoring of well-being and mood. Technologies for using changes to the voice to monitor physical and mental health.

- The relationship between Phonetics and Phonology

Broadly speaking, Phonetics is about the processes of speaking and listening, while Phonology is about the patterns (paradigmatic and syntagmatic) of the underlying pronunciation elements used in language. These patterns consist of observed structures and constraints on pronunciation that seem to occur within and across languages and that have effects both synchronically and diachronically.

History shows that the study of Phonology diverged from the study of Phonetics in the 1930s through the influence of the structuralists in the Prague School (see below and John Ohala's account in the Readings). Their motivation was that the contemporary taxonomic approach to Phonetics did not create a parsimonious framework for the description of these pronunciation structures and constraints. Through concepts such as the phoneme and the phonological feature, the structuralists created an abstract language for describing pronunciation inventories, constraints and rules that could be studied as the causes of language variety and language change.

Take, for example, the patterning seen in typical phonological inventories. The English stops form a 3x3 table of manner and place:

| English Stops | Bilabial | Alveolar | Velar |
|---|---|---|---|
| Voiced Plosive | b | d | g |
| Voiceless Plosive | p | t | k |
| Voiced Nasal | m | n | ŋ |

Why is there a repeating pattern here? If pronunciation choices arose only from the need to keep sounds distinct, why is there patterning that actually makes sounds more similar? In other words, if Phonetics were the only influence on inventory design, wouldn't you expect the 44 phonemes of English to be equally distinctive, made up from 44 distinct articulations with little sharing of articulatory or acoustic elements between them? Phonologists would say that these patterns arise in the inventory because of inherent structural properties of our linguistic competence, and that the study of such patterns will provide insight into the universal properties of human language.

Phonological patterns like these arise (a Phonologist would say) because phonemes are compounds of more fundamental units, just as the variety of sub-atomic particles is explained by the Standard Model of particle Physics. Over the years there have been a number of attempts to explain this type of pattern in the phonological inventory by decomposing phonological choices into more primitive units: Jakobson's features, Kaye's phonological elements, Goldstein's articulatory gestures or Smolensky's optimal constraints.
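To make the decomposition idea concrete, here is a toy sketch in Python. It rests on a deliberately simplified, hypothetical feature set: each English stop is treated as one place value plus one "manner" value that lumps voicing and nasality together. This is not Jakobson's feature system or any other published analysis. It is just an illustration of how a small set of shared units can generate a patterned inventory.

```python
from itertools import product

# Hypothetical, simplified feature values (an assumption for illustration):
# each stop = one place value + one "manner" value (voicing and nasality lumped).
PLACES = ["bilabial", "alveolar", "velar"]
MANNERS = ["voiced plosive", "voiceless plosive", "voiced nasal"]

# IPA symbols laid out as in the English stops table: rows are manners, columns are places.
SYMBOLS = {
    "voiced plosive": ["b", "d", "g"],
    "voiceless plosive": ["p", "t", "k"],
    "voiced nasal": ["m", "n", "ŋ"],
}


def build_inventory():
    """Map every (manner, place) combination to its IPA symbol."""
    return {
        (manner, place): SYMBOLS[manner][PLACES.index(place)]
        for manner, place in product(MANNERS, PLACES)
    }


def shared_features(a, b, inventory):
    """Count how many of the hypothetical feature values two symbols have in common."""
    features = {symbol: set(key) for key, symbol in inventory.items()}
    return len(features[a] & features[b])


inventory = build_inventory()
print(len(inventory), "segments from", len(PLACES) + len(MANNERS), "feature values")
print(shared_features("p", "b", inventory))  # same place, different manner: 1
print(shared_features("p", "g", inventory))  # no feature in common: 0
```

Under these assumptions, six feature values generate all nine stops, and pairs such as /p/ and /b/ share a feature while /p/ and /g/ share none. An inventory built from unrelated articulations would instead need a separate unit for every phoneme.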

But could there equally be a Scientific Phonetics explanation? Might phonetic similarities across phonemes be explainable in Phonetic terms? It may be the case for example that sharing gestures across phonemes might reduce the complexity of articulation, or facilitate lexical access, or aid in remembering the pronunciations of words, or make speech communication robust to talker variability. The same patterns will still be there, but they will have a scientific explanation.

It is at least conceivable that all the "patterns" and "processes" studied in Phonology have their roots in the Phonetics of speech communication. The form of speech is not arbitrary and rigid; it is instead contingent on human anatomy, its neurophysiological control, our cognitive capacities and our social structures. Language is not something outside ourselves that we need to struggle to learn to use. Spoken language was invented by humans for humans and has exactly the form it has because of the physical, physiological, psychological and social aspects of being human. Phonological patterns are complicated by language change and the random nature of variability but may nevertheless be explainable in scientific terms.

Ohala makes the point that if we are interested in the really big questions about speech, then the division between phonetics and phonology is not very helpful. If we want answers to questions such as "How did language evolve?", "Why are humans the only primates with language?", or "What are the neural representations and processes of language in the brain?" then it is only a scientific study of the physical character of linguistic communication that will allow us to discover and evaluate potential explanations. One goal of this scientific endeavour might be to show that the patterning of spoken language studied in phonology arose from the neural capacities of the human brain and the physical form of the human body in combination with exposure to human culture. We may then obtain a scientific, empirical account of Phonology.

- A history of Phonetics in people and ideas

These are some of my personal highlights (with a UCL bias) - let me have your suggestions for additions.

Date Person or Idea 19th

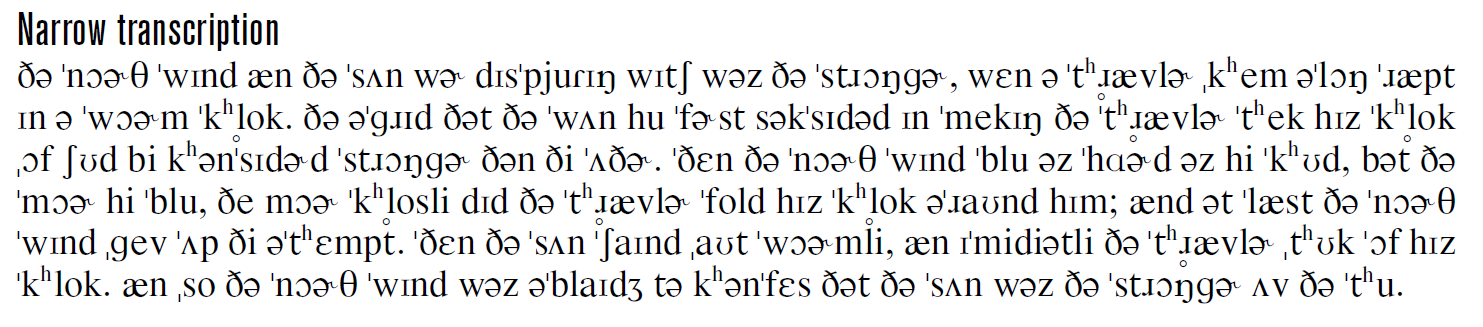

cent.Alexander Ellis (1814-90) introduced the distinction between broad and narrow phonetic transcription. The former to indicate pronunciation without fine details, the latter to 'indicate the pronunciation of any language with great minuteness'. He also based symbols on the roman alphabet, just the IPA does today.

Henry Sweet (1845-1912) introduced the idea that the fidelity of broad transcription could be based on lexical contrast, the basis of the later phonemic principle. He also transcribed the "educated London speech" of his time, giving us the first insights into Received Pronunciation.

Hermann von Helmholtz (1821-94) was a physicist who applied ideas of acoustic resonance to the vocal tract and to the hearing mechanism.

Thomas Edison (1847-1931) invented the phonograph (1877), the first device that could both record and play back sound, which allowed careful listening and analysis of speech for the first time.

Paul Passy (1859-1940) was a founding member and the driving force behind the International Phonetic Association (see Readings). He published the first IPA alphabet in 1888.

1900-1929

Daniel Jones (1881-1967) founded the Department of Phonetics at UCL and was its head from 1921-1947. He is famous for the first use of the term 'phoneme' in its current sense and for the invention of the cardinal vowel system for characterising vowel quality.

1930s

The First International Congress of Phonetic Sciences was held in 1932 in Amsterdam.

Between 1928 and 1939, the Prague School gave birth to phonology as a separate field of study from phonetics. Key figures were Nikolai Trubetzkoy (1890-1938) and Roman Jakobson (1896-1982).

1940s

Invention of the sound spectrograph at Bell Laboratories, 1946.

1950s

Invention of the pattern playback (a kind of inverse spectrograph) at Haskins Laboratories, 1951. This was used for early experiments in speech perception.

Publication of R. Jakobson, G. Fant and M. Halle's Preliminaries to Speech Analysis, 1953. This presented the idea of distinctive features as a way to reconcile phonological analysis with acoustic-phonetic form.

Dennis Fry (1907-1983) and Peter Denes built one of the very first automatic speech recognition systems at UCL, 1958.

John R. Firth (1890-1960), working at UCL and at SOAS, introduces prosodic phonology as an alternative to monosystemic phonemic analysis.

1960s

Early x-ray movies of speech collected at the cineradiographic facility of the Wenner-Gren Research Laboratory at Norrtull's Hospital, Stockholm, Sweden, 1962.

First British English speech synthesized by rule, John Holmes, Ignatius Mattingly and John Shearme, 1964.

1970s

Gunnar Fant (1919-2009) publishes the second edition of Acoustic Theory of Speech Production (first edition 1960), a ground-breaking work on the physics of speech sound production, 1970.

Development of the electro-palatograph (EPG), an electronic means to measure degree of tongue-palate contact, 1972.

Development of Magnetic Resonance Imaging (MRI), which allowed safe non-invasive imaging of our bodies for the first time, 1974.

Adrian Fourcin, working at UCL, describes the Laryngograph, a non-invasive means of measuring vocal fold contact, 1977.

Graeme Clark (1935-), working in Melbourne, Australia, led a team that developed the first commercial cochlear implant (bionic ear), 1978.

1980s

Dennis Klatt (1938-1988) develops the KlattTalk text-to-speech system, which became the basis for the DECtalk product, 1983.

Famously, Stephen Hawking used DECtalk for so long that he became recognised by its synthetic voice.

First automatic speech dictation system developed at IBM by Fred Jelinek, Lalit Bahl and Robert Mercer among others, 1985.

Kai-Fu Lee demonstrates Sphinx, the first large-vocabulary, speaker-independent, continuous speech recognition system, 1988.

1990s

Development of Electromagnetic articulography (EMA), a means to track the motion of the articulators in speaking in real time using small coils attached to the tongue, jaw and lips.

Publication of the TIMIT corpus of phonetically transcribed speech of 630 American talkers, 1993. This has been used as the basis for much research in acoustic-phonetics.

Jim and Janet Baker's company Dragon Systems launches the Dragon NaturallySpeaking continuous speech dictation system, 1997.

Increasing use of functional MRI to study the neural basis for language production and perception, 1997.

Ken Stevens (1924-2013) publishes Acoustic Phonetics, the most complete account yet of speech acoustics, 1999.

2000s

Discovery of FOXP2, the first gene shown to have language-specific actions, 2001.

Microsoft include free text-to-speech and speech-to-text applications for the first time in Windows Vista, 2006.

2010s

Development of real-time MRI that can be used to image the articulators during speech, 2010.

Advances in Electrocorticography (recordings of electrical activity made directly from the surface of the brain) allow us to study the patterns of neural excitation in the brain associated with speaking and listening in real time. Recently there have been some astonishing new discoveries that challenge our understanding of how the brain speaks and listens, 2015.

New machine learning methods based on deep neural networks are reported to match the word error rate of professional human transcribers on a conversational telephone speech benchmark for the first time, 2016.

Readings

Essential

- Ohala, J. J. (2004). Phonetics and phonology then, and then, and now. In H. Quené & V. van Heuven (eds.), On speech and language: Studies for Sieb G. Nooteboom. LOT Occasional Series 2, pp. 133-140. A readable history of Phonetics alongside a discussion of the different goals of Phonetics and Phonology.

- MacMahon, M. K. C. (1986). The International Phonetic Association: The first 100 years. Journal of the International Phonetic Association, 16, pp. 30-38. An interesting account of the early years of the International Phonetic Association that describes how the goals of Phonetics changed as it developed.

Background

- Ohala, J. J. (2010). The relation between phonetics and phonology. In W. J. Hardcastle, J. Laver & F. E. Gibbon (eds.), The Handbook of Phonetic Sciences, Second Edition. Oxford, UK: Blackwell Publishing Ltd. [available in library]. This is an expanded version of the Ohala paper listed above.

- Ashby, M., Faulkner, A. & Fourcin, A. (2012). Research department of speech, hearing and phonetic sciences, UCL. The Phonetician, 105-106. A brief sketch of the history and activities of my department.

Language of the Week

This week's language is English, as spoken by an American speaker from Southern Michigan.

Listen to the recording and complete the following tasks:

- Find 3 phonetic differences between the accent of the American English speaker above and Standard Southern British English (SSBE).

- What phonological differences are there between varieties of American English and SSBE?

Laboratory Activities

This lab will start with a refresher on the use of the IPA alphabet. It is organised as a set of three interactive quizzes that run on the lab computers. Working in pairs, you will be challenged to identify symbols, sounds and Voice-Place-Manner labels as fast as you can in competition with the rest of the class. There may be prizes.

The quizzes are:

- Identify the Place and Manner of a consonant, or the Frontness/Openness of a vowel from the symbol or an audio sample.

- Identify the IPA Symbol for a consonant from a Voice-Place-Manner label, or for a vowel from a Rounding-Frontness-Openness label or from an audio sample.

- Identify the IPA Symbol for a consonant or vowel from just an audio sample.

You will then be challenged to transcribe a piece of speech in a language (probably) unknown to you. You can then compare your attempt with the "official" version.

Tutorial Activities

We will reflect upon the relationship between speaking and (hand-)writing. What Phonetic and Phonological phenomena have their analogies in writing? What do we learn about the relationship between Phonetics and Phonology from the study of writing?

Reflections

You can improve your learning by reflecting on your understanding. Come to the tutorial prepared to discuss the items below.

- What is it that is actually communicated through the speech chain?

- How might the fact that the listener can see the speaker help communication?

- How might the fact that the listener can hear himself/herself help communication?

- What is the difference between a narrow and a broad transcription? Why are both useful?

- What makes Phonetics an experimental science?

Last modified: 10:20 08-Oct-2017.